The EU AI Act: At a glance

Initially proposed by the European Commission in April 2021, the long-awaited EU AI Act was finally approved by the European Parliament on March 13th, 2024. This comprehensive legislation, the first of its kind globally, is centred on a risk-based approach designed to safeguard individual rights. Under the Act, AI systems are categorized by their associated risk level, with requirements ranging from minimal obligations for low-risk systems to strict conformity assessments for high-risk applications. Although negotiations between the European Commission, the Council of the European Union and the European Parliament have concluded, the technical standards that will guide industry practitioners are still being developed. The complexity, speed and unprecedented nature of emerging AI technologies are likely to make this work challenging.

Overview

To oversee the implementation of the AI Act, the European Commission has established an AI Office tasked with centralizing enforcement and collaborating closely with Member States’ governing bodies. The two-year implementation phase will begin with a six-month period for Member States to phase out prohibited AI systems, and most other rules are slated to take effect by 2026. The Act’s lengthy legislative process unfolded against a backdrop of rapid advances in machine learning, notably OpenAI’s release of ChatGPT in 2022. The race among technology companies for market share in generative AI consumer applications prompted regulators and governments worldwide to reevaluate their approach to AI governance and policy.

The legislation was crafted amidst ongoing debates concerning the balance between fostering innovation and ensuring adequate regulation. Discussions around innovation and regulation often draw on regional stereotypes, with the EU perceived as advocating stricter regulation compared to its ostensibly more pro-innovation US counterpart.

Regulating a type of technology, rather than governing sector by sector, raises the challenge of keeping the rules resilient to rapid technological advancement, a hurdle familiar to many regulators. The emergence of generative AI consumer applications has propelled machine learning innovation at an unprecedented pace, with frequent releases of both open-source and closed-source models. The Act incorporates provisions to adapt to this evolving landscape, such as categorizing general-purpose AI models by the total computing power used to train them, as a proxy for their capabilities. Models trained using more than 10^25 floating-point operations (FLOPs) in total are deemed to carry systemic risk, and the AI Office is empowered to adjust these thresholds in response to technological developments, including advances in computing power.
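To make the compute threshold more concrete, here is a minimal sketch that checks an estimated training-compute figure against 10^25 FLOPs. It assumes the widely used rule of thumb that training a dense transformer costs roughly 6 FLOPs per parameter per training token (C ≈ 6·N·D). This heuristic is not defined by the AI Act, and the function names and example model sizes are hypothetical, chosen only for illustration.

```python
# Threshold above which a general-purpose AI model is deemed to carry
# systemic risk (cumulative training compute, in FLOPs). The threshold
# may be adjusted over time, so it is a parameter rather than hard-coded logic.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough training-compute estimate for a dense transformer.

    Assumption: the common heuristic C ~ 6 * N * D (about 6 FLOPs per
    parameter per token, covering forward and backward passes). This is
    NOT a method prescribed by the AI Act.
    """
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flops: float,
                           threshold: float = SYSTEMIC_RISK_THRESHOLD_FLOPS) -> bool:
    """Return True if cumulative training compute is more than the threshold."""
    return training_flops > threshold


if __name__ == "__main__":
    # Hypothetical model sizes, not real systems.
    examples = {
        "hypothetical 7B-parameter model, 2T tokens": (7e9, 2e12),
        "hypothetical 1T-parameter model, 10T tokens": (1e12, 1e13),
    }
    for name, (params, tokens) in examples.items():
        flops = estimate_training_flops(params, tokens)
        print(f"{name}: {flops:.2e} FLOPs, "
              f"systemic risk presumed: {presumed_systemic_risk(flops)}")
```

Under this heuristic, the first hypothetical model (about 8.4e22 FLOPs) falls well below the threshold, while the second (about 6e25 FLOPs) exceeds it. Keeping the threshold as a parameter mirrors the Act's provision for adjusting it as computing power advances.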

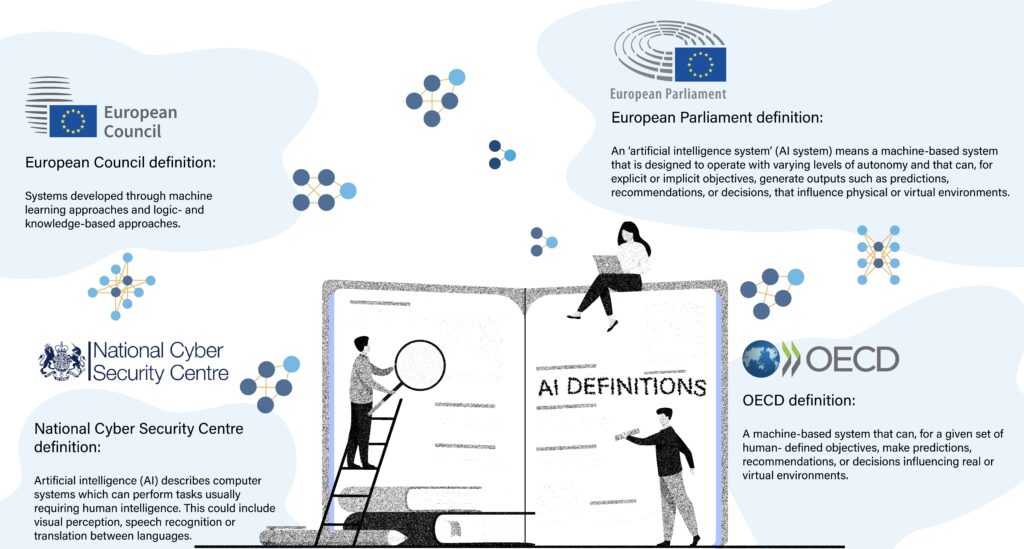

Definitions of AI systems vary across entities, including the European Council, European Commission, European Parliament, National Cyber Security Centre (UK government), and the OECD.

Regional approaches to AI governance and regulation

The EU AI Act aims to establish a harmonized approach to AI regulation within the European Union, promoting legal certainty and preventing fragmentation across Member States. It joins a mix of other regimes that cover technology in the EU, including the GDPR, the Digital Services Act and the Digital Markets Act. The AI Act is likely to have far-reaching implications and to serve as a blueprint for other regions developing their own AI governance frameworks. Like the GDPR, the AI Act has extraterritorial reach, and some commentators anticipate a ‘Brussels effect’, in which EU rules become de facto global standards. How US legislation evolves will likely be driven in part by companies lobbying to reduce the operational overhead of complying with disparate regional rules.

For now, there is a growing disparity between the EU’s approach to AI governance and that of the US, where AI is still governed through existing laws and regulations. For instance, in 2023 iTutorGroup agreed to pay $365,000 to settle an age-discrimination suit brought by the Equal Employment Opportunity Commission (EEOC), which alleged that the company’s automated hiring software rejected older applicants. More recently, the Federal Trade Commission (FTC) launched an inquiry into investments and partnerships between generative AI companies and major cloud service providers.

Despite these differences, there is an appetite for international alignment and interoperability. The OECD Principles on Artificial Intelligence, which aim to promote trustworthy AI, were adopted by member countries in 2019. In May 2023, the G7 countries convened and established the G7 Hiroshima Artificial Intelligence Process. Most recently, on the 2nd of April, the US and UK agreed to collaborate on developing safety evaluation frameworks for AI tools and systems.