AI and the mind

From the myth of Pygmalion to the stories of Isaac Asimov, scientists and artists have considered the boundaries of the artificial and the human.

“If you are failing at “regular” ethics, you won’t be able to embody AI ethics either. The two are not separate.”

Rachel Thomas – fast.ai

I am an experienced researcher, currently focused on machine learning and advanced analytics in financial risk management. My current research areas include machine learning, cognitive science, and technology policy and ethics. I am particularly interested in AI governance and policy. I also specialize in graphic design and illustration.

This website is a collection of articles, essays and illustrations I have made to explore the current themes and narratives in Cognitive Science, AI and Social Anthropology.

About me

“Our political conversations are happening on an infrastructure built for viral advertising”

"Building robust neural networks"

Building robust neural networks means going back to the basics: statistical processes and learning theory.

Can rule-based systems learn?

The rise and fall of symbolic AI. What can symbolic AI contribute to modern learning systems?

“Cognitive science is not so much a single discipline as a meeting ground for many different disciplines, all of which are concerned with the nature of the mind and the brain”

Margaret Boden – Cognitive Scientist

The pandemonium architecture

The pandemonium architecture is a cognitive model of pattern recognition developed by Oliver Selfridge (1959). Selfridge illustrated it as a hierarchy of “demons”, each responsible for one stage of recognition (see image on the right for my interpretation).

The demons illustrate the different stages in processing. The image demon and the feature demons represent the differentiation of individual features from a visual picture. The cognitive demons debate the relative importance of different features to the final classification of the object. Finally, the decision demon concludes what the object is; in this instance, it’s an image of the sun.

This bottom-up view of feature processing does not account for context-driven object recognition such as pareidolia (seeing faces in things). How object-recognition processes that start from low-level visual features give rise to abstract concepts remains an open field of investigation.
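The bottom-up flow of the pandemonium model can be sketched in a few lines of Python. This is only an illustrative toy, not Selfridge's original formulation: the feature names, the three patterns, and the scoring rule (the fraction of a pattern's features that were detected) are all assumptions made for the example.

```python
from typing import Dict, Set

# Hypothetical patterns: each cognitive demon "knows" the features of one object.
COGNITIVE_DEMONS: Dict[str, Set[str]] = {
    "sun": {"circle", "radiating_lines"},
    "moon": {"crescent"},
    "wheel": {"circle", "spokes", "hub"},
}


def feature_demons(image: Set[str]) -> Set[str]:
    """Each feature demon watches for one known feature and reports it if present."""
    known_features = set().union(*COGNITIVE_DEMONS.values())
    return image & known_features


def cognitive_demons(features: Set[str]) -> Dict[str, float]:
    """Each cognitive demon 'shouts' louder the more of its pattern it sees."""
    return {
        name: len(features & wanted) / len(wanted)
        for name, wanted in COGNITIVE_DEMONS.items()
    }


def decision_demon(shouts: Dict[str, float]) -> str:
    """The decision demon picks whichever cognitive demon shouts loudest."""
    return max(shouts, key=shouts.get)


def recognise(image: Set[str]) -> str:
    """Run the stages in order: image -> features -> cognitive shouts -> decision."""
    return decision_demon(cognitive_demons(feature_demons(image)))


# The image demon's record of the picture, given here as a set of raw marks.
print(recognise({"circle", "radiating_lines", "smudge"}))
```

Information only ever flows upwards in this sketch, which is why it has no way to let context (such as an expectation of seeing a face) influence which features are reported.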

“The human brain effortlessly reformats the 'blooming, buzzing, confusion' of unstructured visual data streams into powerful abstractions that serve high-level behavioural goals such as scene understanding, navigation, and action planning.”

Daniel Yamins – Computational Neuroscientist

Computational neuroscience and AI - visual processing

The hierarchical processing of the ventral visual pathways was revealed in Hubel and Wiesel’s seminal 1962 experiments on cats. They linked the retina to the cortex and exposed the orientation selectivity of neurons in the V1 region of the visual cortex. Since Hubel and Wiesel’s study, much research has investigated the theory that the ventral visual stream hierarchically processes increasingly complex feature representations, with feature complexity peaking at the inferior temporal cortex.

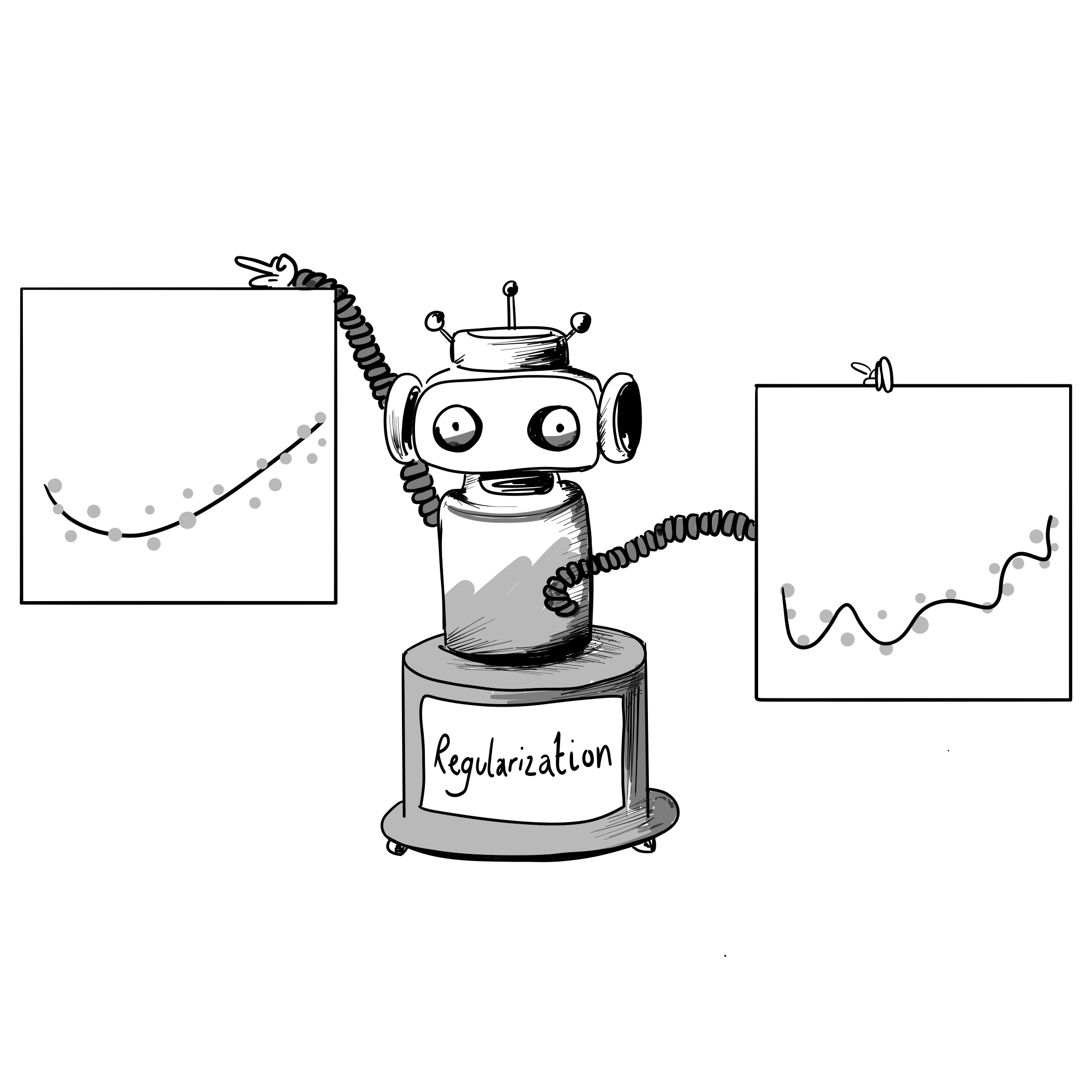

According to Yamins et al., the inferior temporal cortex can support “invariant object categorization” for a broad spectrum of tasks. This hierarchical processing is analogous to the series of stacked layers that form modern neural networks. In convolutional neural networks (CNNs), each layer convolves its input with a set of filters to produce feature maps, and different filters are activated by features of varying levels of complexity. Yamins et al. suggest that the later layers in a CNN selectively process specific objects while becoming more tolerant to natural variability and noise in the image. The deeper networks “correspond intuitively to architecturally specialized sub-regions like those observed in the ventral visual stream.”
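To make the idea of a feature map concrete, here is a minimal sketch of a single convolutional layer in plain Python. The two 2×2 filters and the tiny input image are invented for illustration; in a trained CNN the filter values are learned rather than hand-written. The filters are orientation-selective, which is a loose analogy to the V1 neurons described above, not a model of them.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (no padding, stride 1) and sum the products.

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical hand-written filters, each tuned to one edge orientation.
VERTICAL_EDGE: Matrix = [[-1.0, 1.0], [-1.0, 1.0]]
HORIZONTAL_EDGE: Matrix = [[-1.0, -1.0], [1.0, 1.0]]

# A toy image: dark on the left, bright on the right (one vertical edge).
IMAGE: Matrix = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]

vertical_map = relu(conv2d(IMAGE, VERTICAL_EDGE))
horizontal_map = relu(conv2d(IMAGE, HORIZONTAL_EDGE))
print(vertical_map)    # responds only where the vertical edge sits
print(horizontal_map)  # stays silent: there is no horizontal edge
```

Stacking further layers on top of maps like these is what lets later layers respond to combinations of edges, and eventually to whole objects.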

“Turing thought the way to make progress was to focus on questions that could be objectively answered by watching what happens in the world, rather than taking refuge in talk of personal experiences that are necessarily hidden from external observation.”

Sean Carroll – Theoretical Physicist

"We cannot say that we can understand intelligence, the structure of the human mind, until we have a model that accounts for how it learns."

– Neches, Langley and Klahr